For a little under $8,000 you can have your own very capable private AI at home that you can feed data to and get answers from.

It’s not hard to do, it’ll put you out at the cutting edge, and I think this offers significant advantages to those who follow through with it. This guide talks about what the machine consists of, how to put it together, what you might use it for, and what is still fantasy.

This is written for us semi-normal people interested in AI. We aren’t going to build our own LLM from scratch and we may not code for a living. We’ve used ChatGPT or Perplexity and think it’s neat, and we can copy/paste commands into a CLI. We may have never built our own PC but that’s fine; I hadn’t either.

We’re willing to read a bit and muck about, but in general we don’t have time to develop deep domain expertise; we just want something that mostly works and puts us out at the forefront of the AI revolution.

You can also spend less if you want. My budget was $8k, so that’s what I used. $3k is probably as low as you can go and still get reasonable performance, but I’m sure some wizard out there will let me know in the comments that if I just re-ionize toothpaste and flame-char duct tape to exactly 417.314 degrees Fahrenheit, then smelt my own iron, I could do the whole thing for $5.

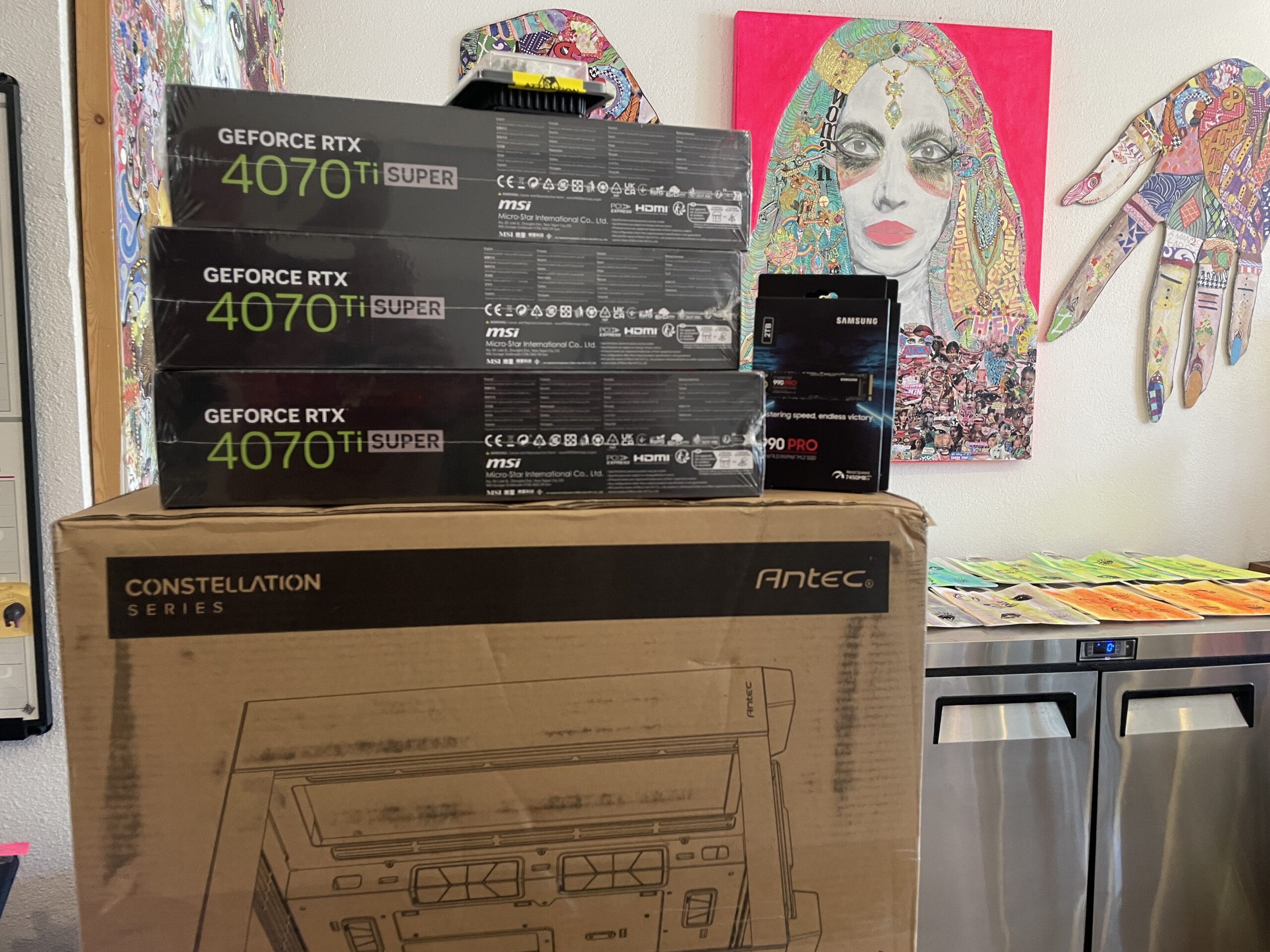

I didn’t do that. I just spent the money, enjoyed building an excellent thing, and called it “Monstra”.

The GPUs sit a little too close together for comfortable long-term running, but for relatively short tasks the thing is awesome.

Yes, you can run an LLM on your home computer right now; it’ll just be a lot slower than on a purpose-built monster. “Monster” is relative: for a home setup this is on the “overbuilt” side, but compared to massive, top-of-the-line racks of A100s or H100s, this thing is puny.

Ok, so what do you get out of building your own personal AI machine?

- Privacy in your queries. Maybe you don’t want OpenAI to know about the product you’re building that you need help coding.

- Related to this, you can use an AI on data you might not want to share, like tax records or shipping information for every sale your company has ever made.

- You get the flexibility to set it up exactly how you want: how much “horsepower” you use, how creative you want the responses to be, and what specific things to focus on.

- You get to experiment on the cutting edge, and find out how much you can actually do and how much is still you fantasizing about having your own drysine queen.

- You can also rent out your spare capacity.
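The “how creative” knob in the list above usually shows up in local LLM tools as a setting called temperature. As a rough sketch (not any specific tool’s code), temperature divides the model’s raw scores for each candidate next word (the logits) before they’re turned into probabilities: low values let the top choice dominate, high values flatten things out. The three scores below are made up for illustration.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw next-word scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating so large scores don't overflow.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At 0.2 the first word gets nearly all of the probability; at 2.0 the other words get a real shot, which reads as more “creative” (and more likely to wander off).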

I’ll start with what I’ve been experimenting with, then we’ll get into the details of the build and how to set it all up. This is not (by a long shot) everything you could do; these are just some of the problems I’d like to solve for myself.

Training Plans (race prep, overall fitness, etc.)

A few years ago, I hired a high-end private coach to help me build a training plan for a hike ’n fly paragliding race I was preparing for. He’s a former Olympian who ran strength and conditioning at Red Bull. I’ll admit it: it was probably a lot more coaching horsepower than I needed.

He charges $800 a month for what I wanted: a customized plan, unlimited calls with an elite coach, and ongoing adjustments as the plan inevitably needs to change. We spoke every day, it was awesome, and he was worth every penny. Still, $800 is $800.

Now, that’s on the expensive side. If you want excellent coaching you could hire the folks over at Evoke Endurance, who charge $350/month for a similar service.

If you had a private AI, you could take PDFs of all the books Scott Johnston over at Evoke has written, transcribe all the Evoke (and Uphill Athlete) podcasts, add whatever other content you want, then feed it all to your AI and let it help you develop your own custom training plan.

If you wanted to be a little entrepreneurial, you could open that up as a service for others. It won’t be quite as easy as I’m making it sound, since you’ll need a RAG setup (more on that below) and some nerdy vector database skills, but with AnythingLLM you can probably pull this off for yourself with some elbow grease.

Let me be clear here: As of July 2024, the AI won’t be as good as a professional coach. In fact, when you start it may be worse than a bad coach. Still, I’m *guessing* that’ll change by December of 2024 (the ol’ “6 months in AI is a new century” rule). That’s a guess and I’m likely to be wrong.

LiveChat Agent

Since 2009, my wife and I have run Paleo Treats, which has both an online store and a brick-and-mortar shop. Since 2014 we’ve used LiveChat to chat with folks who visit the website. If you’ve ever been on the PT site and opened up a chat, you were talking to me or my wife. That’s cool, but…we turn off the chat when we sleep, and throughout the day when we have other things to do.

LiveChat offers an AI service called ChatBot that starts at $52/month. With our HomeAI setup, we could replace that with a custom agent, have more control (and learn way more about setting those up), and give super customized chat experiences to folks who want to buy gluten-, grain-, and dairy-free desserts online.

Taxes

I’ve been in crypto since 2014 or so, and every year it’s a discussion with our tax preparer: “What are these token-things? Why are you still doing them? There’s not any great guidance on this…”

Because taxes are about money and money is so personal, I’m not sure I want to be uploading all my financial docs to every tax site promising “We do it with AI!” out there.

I’d much rather feed my private AI the US tax code (Title 26 of the US Code), give it my last 10 years of returns, then work through the current year with an AI looking over my shoulder. As long as I put my data in correctly, I expect that eventually I’ll be able to do my taxes far more efficiently with my own personal consultant that knows every aspect of my finances.

Again, this won’t be easy or perfect (or maybe even that helpful) right now, but if I start working on this now, I’m guessing by the next time I need to submit taxes it’ll be a button push, a few questions, and perhaps the best tax prep experience I’ve ever had.

Our current tax preparer charges about $6,000 per year to integrate 3 businesses, personal taxes and my various crypto adventures.

Inventory & Operations

Again, as part of the Paleo Treats business, we’re always ordering ingredients and consumables, doing production runs, then packing and shipping out items. I’ve built a series of Python scripts to help keep track of costs, but being able to integrate all of our operations data into an LLM starts to make finding opportunities much more efficient. Imagine asking “How many packages have we shipped to Sugar Land, Texas this month, and how does that compare to last year?” or “Take a look at this giant CSV of every package we’ve shipped over the last 10 years and tell me which city gets the most packages.”

Yes, I know you can do that with Excel or Google Sheets. Still, I think the ability to talk to a personal and secure AI starts to change the game for small businesses that aren’t necessarily data wizards but still have legitimate questions about operations opportunities.
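For comparison, here’s roughly what those two questions look like as a plain Python script, the kind of thing you’d otherwise have to write (or build in a spreadsheet) yourself. The column names (`ship_date`, `city`, `state`) and the tiny inline sample are hypothetical; a real shipping export will have its own layout.

```python
import csv
import io
from collections import Counter

# Hypothetical export: one row per package shipped, dates as YYYY-MM-DD.
SAMPLE_CSV = """ship_date,city,state
2023-07-03,Sugar Land,TX
2023-07-19,Austin,TX
2024-07-02,Sugar Land,TX
2024-07-15,Sugar Land,TX
2024-07-20,Denver,CO
2024-06-30,Austin,TX
"""


def load_rows(text):
    """Parse CSV text into a list of dicts keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def top_cities(rows, n=3):
    """Count packages per (city, state) and return the n most common."""
    counts = Counter((r["city"], r["state"]) for r in rows)
    return counts.most_common(n)


def packages_in_month(rows, city, state, year, month):
    """Count packages shipped to one city in a given year and month."""
    prefix = f"{year:04d}-{month:02d}"
    return sum(
        1
        for r in rows
        if r["city"] == city and r["state"] == state and r["ship_date"].startswith(prefix)
    )


rows = load_rows(SAMPLE_CSV)
print(top_cities(rows, 1))
this_year = packages_in_month(rows, "Sugar Land", "TX", 2024, 7)
last_year = packages_in_month(rows, "Sugar Land", "TX", 2023, 7)
print(f"Sugar Land, TX in July: {this_year} (2024) vs {last_year} (2023)")
```

It isn’t hard, but you do need to know the column layout and a bit of code for every new question, which is exactly the gap a conversational AI over your own data is meant to close.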

Funzies — Grinding a Vanity Solana Address

I stumbled on a post by Nick Frostbutter on how to “grind” a Solana vanity address using a built-in command in the Solana CLI (Command Line Interface, which is where nerds go to talk to computers). Those instructions use your CPU. The CPU in my machine is pretty powerful (gear list below), but the whole reason I got into this was that I wanted GPU capability for LLMs, so…why not use the GPUs?

My buddy nosmaster found a GitHub repo on how to use GPUs to grind, and after a full weekend of fiddling with various settings, I managed to grind out a gking... Solana address in about 45 minutes. I know, I know, it’s good for almost nothing, and you don’t get a mnemonic phrase (the “twelve words”), just a barely decipherable private key, but it was a fun use of Monstra.
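Some back-of-the-envelope arithmetic shows why grinding takes so long and why GPUs help. Solana addresses are base58-encoded, so each character has 58 possible values. Assuming a five-character, case-sensitive prefix like the `gking` one above, and treating every character as equally likely (a simplification; the leading character of a real address isn’t perfectly uniform), the expected number of random keypairs to generate is 58 to the fifth power.

```python
# Base58 alphabet used for Solana addresses (no 0, O, I, or l).
BASE58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"


def expected_attempts(prefix):
    """Rough expected number of random keys needed to hit a case-sensitive prefix.

    Assumes each base58 character is equally likely at every position,
    which is only approximately true for real addresses.
    """
    for ch in prefix:
        if ch not in BASE58_ALPHABET:
            raise ValueError(f"{ch!r} is not a base58 character")
    return len(BASE58_ALPHABET) ** len(prefix)


attempts = expected_attempts("gking")
seconds = 45 * 60
print(f"{attempts:,} expected attempts")
print(f"~{attempts / seconds:,.0f} keys/second to average that in 45 minutes")
```

Since the search is random, any single run can finish much faster or slower than the expected count, so the implied keys-per-second figure is only a ballpark. Each extra prefix character multiplies the work by 58.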

The Build, Part 1: Understand What Everything Does

Before we get to a parts list, I thought it’d be useful to have an overview of what everything does. It was all a mystery to me when I started, and just knowing what each thing does has been helpful in grasping the overall concept.

Every computer we build for AI will have eight core parts, plus some extra fans and tools:

| Part | What it does |
|---|---|
| CPU (Central Processing Unit) | The “brain” of the thing. |
| GPU (Graphics Processing Unit) | Fundamentally, this is what gives the AI its horsepower. |
| RAM (Random Access Memory) | Think of this as the working memory of the device. |
| Storage | The long-term memory. Not as fast as RAM, but way more of it. |
| Motherboard | The coordinator of all the other parts, and the physical structure they all plug into. |
| Power Supply | Makes sure everything stays on without flickering. |
| Case | Big enough and airy enough to keep everything protected and cool. |
| Cooler | Fans to make sure the CPU specifically stays cool. |
| Additional Cooling Fans | Drive air through the whole case to keep all parts cool. |
| Other nerd tools | Thermal paste, screwdriver, cable management, etc. |

The GPUs are critical for making the LLMs actually work (at a reasonable speed). The CPU manages overall workflow, and the RAM supports large datasets. Everything else supports that. Of course, you can scale up (or down) from here.
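A common rule of thumb for why the GPUs (specifically their memory, called VRAM) matter so much: a model’s weights take roughly its parameter count times the bytes used per parameter, and you want all of that to fit in GPU memory. The sketch below applies that rule plus an assumed 20% overhead for things like the context cache; the overhead figure is a rough assumption rather than a spec, and real usage varies by tool and settings.

```python
# Approximate bytes per parameter at common precision / quantization levels.
BYTES_PER_PARAM = {
    "fp16": 2.0,
    "8-bit": 1.0,
    "4-bit": 0.5,
}


def estimate_vram_gb(params_billions, precision, overhead=0.20):
    """Rough GPU memory (in GB, 1 GB = 1e9 bytes) to hold a model's weights.

    `overhead` is an assumed fudge factor for context cache and runtime buffers.
    """
    weights_gb = params_billions * BYTES_PER_PARAM[precision]
    return weights_gb * (1 + overhead)


for size in (8, 70):
    for precision in ("fp16", "4-bit"):
        print(f"{size}B @ {precision}: ~{estimate_vram_gb(size, precision):.0f} GB")
```

By this estimate a 70B-parameter model at 4-bit wants on the order of 40 GB, more than a single 24 GB card holds, which is one reason people put more than one GPU in a box like this.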

Ok, so with the basic stuff out of the way, what should you buy and how does it go together?

The Build, Part 2: Buy & Assemble Your HomeAI

I’ll start by saying that I’m impatient and Amazon is easy, so I bought everything there. I’m sure if you hunt around and have some patience, you can save yourself some money.

All in, it ran just under $8k. Of course, if you want someone to build it for you, Gigabyte is offering similar builds starting at $11k. You can also check out a Google Sheet I put together for different “size” builds from $3k to $8k. The “V3” on that list is Monstra.

I ordered everything on a Monday and, this being Amazon, it was all there by Thursday, hiccups included.

Assembly was more or less straightforward, though I had significant help from Vortal, nosmaster, and Rob, who are all part of the mysterious advanced tech exploration group called AppleFuckers over on the GK Discord.

Each part comes with plenty of guides and manuals, and from my limited experience, if you just follow the Motherboard manual it’ll walk you through everything you need to do.

Still, if this is your first build, I’d find a computer nerd and have ’em help you with it; many things are not obvious, and having an experienced guide to walk you through updating the BIOS (if that’s even needed) is super helpful.

What To Expect The First Week

First off, know that you’re an experimenter. Nothing will work out of the box; everything requires the ability to use the CLI to do basic install stuff.

Still, if you can search the internet, copy/paste, and use ChatGPT to get you going, you’ll be up and running your own private AI in just a few days.

The installs themselves don’t take that long; once you know how, each one is about a ten-minute job. BUT…learning how to do it, what works and what doesn’t, and what you should and shouldn’t pay attention to takes time.

Here’s what I loaded up and used the first week:

- Ollama (with a bunch of different models)

- OpenWebUI

- AnythingLLM

- Stable Diffusion

Each of those is its own “flavor” of tool: Ollama runs LLMs locally, Stable Diffusion generates images, and OpenWebUI and AnythingLLM are ways to interact with an LLM.
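Once Ollama is running, it also exposes a local HTTP API (by default on port 11434), which is handy when you want your own scripts to talk to a model. Here’s a minimal sketch using only Python’s standard library, assuming Ollama is running on the same machine and you’ve already pulled a model. The model name `llama3` used below is just an example, so swap in whatever you’ve downloaded. The `temperature` option is the “how creative” knob mentioned earlier.

```python
import json
import urllib.request

# Default local Ollama endpoint; change it if you run Ollama on another host/port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt, temperature=0.7):
    """Build a non-streaming /api/generate request for a local Ollama server."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a stream of chunks
        "options": {"temperature": temperature},
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt, temperature=0.7):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt, temperature)) as resp:
        return json.loads(resp.read())["response"]
```

With the server up, usage would be something like `print(ask("llama3", "In one sentence, what is a RAG?"))`.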

About that RAG

If you want to add in your own content (like the US Tax Code or your shipping info or the contents of a book), you’ll use a RAG, or Retrieval Augmented Generation. I’ll start off by saying this: The *concept* of a RAG is easy; add in the customized thing you want your AI to reference when answering your questions. The *application* of getting the thing to work is the sticky bit.
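To make the concept concrete, here’s a toy version of the “retrieval” half of a RAG in plain Python. Real tools like AnythingLLM use embedding models and a vector database; this sketch swaps those for simple word counts and cosine similarity so it runs with nothing installed. The documents, question, and prompt wording are all made up for illustration.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Very crude stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, docs, k=2):
    """Return the k documents most similar to the question."""
    q = vectorize(question)
    ranked = sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, docs, k=2):
    """Put the retrieved text into the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "Aerobic base training builds endurance with long, easy efforts.",
    "Our brownies are gluten free, grain free, and dairy free.",
    "Orders placed before noon ship the same day.",
]
print(build_prompt("Are the brownies dairy free?", docs, k=1))
```

The “generation” half is then just sending that prompt to your local model. Because this version matches exact words, it misses paraphrases; real embeddings are what let a RAG match on meaning.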

AnythingLLM makes it pretty easy to add in documents, though it’s not ultra clear how to assign them to the specific model/thread you’re working on. OpenWebUI is good for a few docs, but not so good with a ton of docs, and doesn’t really give citations (the way, say, Perplexity does).

Using RAGs is still a long way from “easy”. The data you feed the AI has to be clean and well structured, and frankly, most of us humans walk around with such a mess in our heads about how we organize and understand our own data that it can be a mind-bender to translate it for your machine.
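One concrete piece of “clean and well structured”: RAG tools typically split each document into smaller, overlapping chunks before indexing, so a retrieved passage is small enough to fit in the prompt, and text near a boundary shows up in both neighboring chunks. The sketch below is a simple word-based splitter; the chunk size and overlap are arbitrary example values, not what AnythingLLM or OpenWebUI actually use.

```python
def chunk_words(text, size=200, overlap=40):
    """Split text into chunks of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    # str.split() with no argument also collapses stray newlines, tabs, and double spaces.
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


example = " ".join(f"word{i}" for i in range(10))
for chunk in chunk_words(example, size=4, overlap=1):
    print(chunk)
```

Smaller chunks make retrieval more precise but give the model less surrounding context, so the right size tends to depend on how your documents are written.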

Still, it’s been VERY useful for my own thinking to move through the steps of introducing data to any of the LLMs I’ve worked with.

Next Steps

Rather than giving you a step by step of exactly what to do with each LLM, I’d encourage you to explore this AI jungle on your own. Know that other folks (like me!) are out in the jungle with ya and we’re all learning how to interact with our own private AI in our own way.

So far, of all the things I wanted to do, none have come out the way I want. I’d pretty much expected that. Still, I’m getting exactly what I wanted in the form of hands-on experience working with and around a personal AI and seeing both the opportunities and the limitations.

I found the following two YouTube videos helpful when I was starting out:

How To Use Your Local AI (TechnoTim vid)

Self Hosted AI That’s Actually Useful

If you’re experimenting with this as well, I’d love to hear about it! If you buy a bunch of parts and need help, jump into the GK Discord server; there’s usually someone awake and willing to point you in the right direction.

See ya on the other (AI) side!
